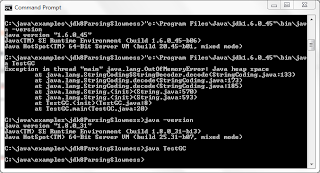

When it is necessary to determine which JDK version was used to compile a particular Java .class file, a common approach is to run javap and look for the listed "major version" in its output. I referenced this approach in my blog post Autoboxing, Unboxing, and NoSuchMethodError, but describe it in more detail here before moving on to how to accomplish this programmatically.

The screen snapshot at the top of this post shows the result of running javap -verbose against the Apache Commons Configuration class ServletFilterCommunication contained in commons-configuration-1.10.jar.

I circled the "major version" in the screen snapshot shown above. The number listed after "major version:" (49 in this case) indicates that this class was compiled with J2SE 5. The Wikipedia page for Java Class File lists the "major version" number corresponding to each JDK version:

| Major Version | JDK Version |
|---|---|
| 52 | Java SE 8 |
| 51 | Java SE 7 |
| 50 | Java SE 6 |
| 49 | J2SE 5 |
| 48 | JDK 1.4 |
| 47 | JDK 1.3 |
| 46 | JDK 1.2 |
| 45 | JDK 1.1 |
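The table follows a simple pattern: subtracting 44 from the major version gives the release number (52 minus 44 is 8 for Java SE 8, 46 minus 44 is 2 for JDK 1.2). The following minimal sketch is not part of the original ClassVersion code; the class MajorVersionNames and its describe method are hypothetical names, and it assumes the same pattern holds for releases after Java SE 8 (it does for, e.g., 53 for Java SE 9 and 61 for Java SE 17).

```java
/**
 * Sketch (hypothetical names): derives a JDK name from a class file "major
 * version" with arithmetic instead of a lookup table. Assumes the table's
 * pattern "release number = major version - 44" also holds for later releases.
 */
public class MajorVersionNames
{
   public static String describe(final int majorVersion)
   {
      final int release = majorVersion - 44;
      if (majorVersion < 45)
      {
         return "Unknown (major version " + majorVersion + ")";
      }
      if (majorVersion <= 48)
      {
         return "JDK 1." + release;          // 45..48 -> JDK 1.1 .. JDK 1.4
      }
      if (majorVersion == 49)
      {
         return "J2SE 5";
      }
      return "Java SE " + release;           // 50 -> Java SE 6, 52 -> Java SE 8, ...
   }

   public static void main(final String[] arguments)
   {
      for (final int major : new int[] {45, 49, 52, 53, 61})
      {
         System.out.println(major + " -> " + describe(major));
      }
   }
}
```

A lookup table like the one in ClassVersion is more explicit about which versions it knows; the arithmetic version trades that for not needing an update with every new release.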

This is an easy way to determine the version of the JDK used to compile a .class file, but it can become tedious to do this for numerous classes in a directory or in JAR files. It would be easier if we could check this major version programmatically so that the check could be scripted. Fortunately, Java supports this. Matthias Ernst has posted "Code snippet: calling javap programmatically," in which he demonstrates use of JavapEnvironment from the JDK tools JAR to perform javap functionality programmatically, but there is an easier way: read the specific bytes of the .class file that indicate the version of JDK used for compilation.

The blog post "Identify Java Compiler version from Class Format Major/Minor version information" and the StackOverflow thread "Java API to find out the JDK version a class file is compiled for?" demonstrate reading the relevant two bytes from the Java .class file using DataInputStream.

## Basic Access to JDK Version Used to Compile .class File

The next code listing demonstrates a minimalistic approach to accessing a .class file's JDK compilation version.

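```java
final DataInputStream input = new DataInputStream(new FileInputStream(pathFileName));
input.skipBytes(4);                                  // bytes 1-4: "magic" number, skipped
final int minorVersion = input.readUnsignedShort();  // bytes 5-6: minor_version
final int majorVersion = input.readUnsignedShort();  // bytes 7-8: major_version
```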

The code instantiates a FileInputStream on the (presumed) .class file of interest and uses that FileInputStream to instantiate a DataInputStream. The first four bytes of a valid .class file contain the "magic" number that identifies it as a Java class file; the code simply skips them. The next two bytes are read as an unsigned short and represent the minor version. After that come the two most important bytes for our purposes, which are also read as an unsigned short and represent the major version. This major version correlates directly with specific versions of the JDK. These significant bytes (magic, minor_version, and major_version) are described in Chapter 4 ("The class File Format") of The Java Virtual Machine Specification.
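The class file format stores multibyte items in big-endian order, so the major version is simply the seventh and eighth bytes read as one 16-bit value. The illustrative sketch below (the class name ClassHeaderLayout is hypothetical) parses a synthetic eight-byte header held in memory rather than a real file. It reads the header with the same DataInputStream calls as the listing above and also assembles the major version by hand from the last two bytes to show that readUnsignedShort() treats them as high byte first.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

/**
 * Illustrative sketch (hypothetical class): parses a synthetic 8-byte class file
 * header held in memory to show where magic, minor_version and major_version sit.
 */
public class ClassHeaderLayout
{
   // CA FE BA BE = magic, 00 00 = minor_version 0, 00 34 = major_version 0x34 (52)
   static final byte[] HEADER =
      {(byte) 0xCA, (byte) 0xFE, (byte) 0xBA, (byte) 0xBE, 0x00, 0x00, 0x00, 0x34};

   /** Returns {minor, major}, read the same way as in the listing above. */
   public static int[] readMinorMajor(final byte[] header)
   {
      try (DataInputStream input = new DataInputStream(new ByteArrayInputStream(header)))
      {
         input.skipBytes(4);                               // skip the magic number
         final int minorVersion = input.readUnsignedShort();
         final int majorVersion = input.readUnsignedShort();
         return new int[] {minorVersion, majorVersion};
      }
      catch (IOException ex)                               // e.g. header shorter than 8 bytes
      {
         throw new UncheckedIOException(ex);
      }
   }

   public static void main(final String[] arguments)
   {
      final int[] versions = readMinorMajor(HEADER);
      // Same major version assembled by hand: high byte first (big-endian).
      final int manualMajor = ((HEADER[6] & 0xFF) << 8) | (HEADER[7] & 0xFF);
      System.out.println("minor=" + versions[0] + ", major=" + versions[1]
         + ", manual major=" + manualMajor);
   }
}
```

Running main prints minor=0, major=52, manual major=52, which the table above maps to Java SE 8.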

In the code listing above, the four "magic" bytes are simply skipped to keep the example easy to understand. However, I prefer to check those four bytes to ensure that they are what is expected for a .class file. The JVM Specification explains what should be expected for these first four bytes: "The magic item supplies the magic number identifying the class file format; it has the value 0xCAFEBABE." The next code listing revises the previous one and adds a check to ensure that the file in question is a Java compiled .class file. Note that the check specifically uses the hexadecimal representation CAFEBABE for readability.

```java
final DataInputStream input = new DataInputStream(new FileInputStream(pathFileName));
// The first 4 bytes of a .class file are 0xCAFEBABE and are "used to
// identify file as conforming to the class file format."
// Use those to ensure the file being processed is a Java .class file.
final String firstFourBytes =
     Integer.toHexString(input.readUnsignedShort())
   + Integer.toHexString(input.readUnsignedShort());
if (!firstFourBytes.equalsIgnoreCase("cafebabe"))
{
   throw new IllegalArgumentException(
      pathFileName + " is NOT a Java .class file.");
}
final int minorVersion = input.readUnsignedShort();
final int majorVersion = input.readUnsignedShort();
```
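Building a hex String is readable, but another option, sketched below as an alternative rather than the approach used in this post, is to read all four magic bytes with DataInputStream.readInt() and compare them with the int literal 0xCAFEBABE. The names MagicCheck and hasClassFileMagic are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;

/**
 * Sketch of an alternative magic-number check (hypothetical names): read the
 * four magic bytes as one int and compare with the literal 0xCAFEBABE.
 */
public class MagicCheck
{
   /** Consumes four bytes from the stream; true if they are CA FE BA BE. */
   public static boolean hasClassFileMagic(final InputStream in)
   {
      try
      {
         // readInt() returns a signed int, and the literal 0xCAFEBABE is also a
         // (negative) int, so the two compare equal when the bytes match.
         // The stream is not closed here; the caller owns it.
         return new DataInputStream(in).readInt() == 0xCAFEBABE;
      }
      catch (IOException ex)   // includes EOFException for inputs under 4 bytes
      {
         return false;
      }
   }

   public static void main(final String[] arguments)
   {
      final byte[] classHeader = {(byte) 0xCA, (byte) 0xFE, (byte) 0xBA, (byte) 0xBE};
      final byte[] zipHeader = {'P', 'K', 3, 4};   // ZIP/JAR files begin with "PK\3\4"
      System.out.println(hasClassFileMagic(new ByteArrayInputStream(classHeader)));
      System.out.println(hasClassFileMagic(new ByteArrayInputStream(zipHeader)));
   }
}
```

Running main prints true and then false. Because the method consumes exactly four bytes and DataInputStream does not buffer, the caller can go on to read minor_version and major_version from the same underlying stream. Treating every IOException as "not a class file" keeps the sketch short but also hides genuine I/O errors, so a production version might rethrow them instead.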

With the most important pieces already examined, the next code listing provides the full listing of a Java class I call ClassVersion.java. It has a main(String[]) method so that its functionality can easily be used from the command line.

```java
import static java.lang.System.out;

import java.io.DataInputStream;
import java.io.FileInputStream;
import java.io.FileNotFoundException;
import java.io.IOException;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

/**
 * Prints out the JDK version used to compile .class files.
 */
public class ClassVersion
{
   private static final Map<Integer, String> majorVersionToJdkVersion;

   static
   {
      final Map<Integer, String> tempMajorVersionToJdkVersion = new HashMap<>();
      tempMajorVersionToJdkVersion.put(45, "JDK 1.1");
      tempMajorVersionToJdkVersion.put(46, "JDK 1.2");
      tempMajorVersionToJdkVersion.put(47, "JDK 1.3");
      tempMajorVersionToJdkVersion.put(48, "JDK 1.4");
      tempMajorVersionToJdkVersion.put(49, "J2SE 5");
      tempMajorVersionToJdkVersion.put(50, "Java SE 6");
      tempMajorVersionToJdkVersion.put(51, "Java SE 7");
      tempMajorVersionToJdkVersion.put(52, "Java SE 8");
      majorVersionToJdkVersion = Collections.unmodifiableMap(tempMajorVersionToJdkVersion);
   }

   /**
    * Print (to standard output) the major and minor versions of JDK that the
    * provided .class file was compiled with.
    *
    * @param pathFileName Name of (presumably) .class file from which the major
    *    and minor versions of the JDK used to compile that class are to be
    *    extracted and printed to standard output.
    */
   public static void printCompiledMajorMinorVersions(final String pathFileName)
   {
      // try-with-resources closes the file when done, so scripts that process
      // many .class files do not leak file handles.
      try (final DataInputStream input = new DataInputStream(new FileInputStream(pathFileName)))
      {
         printCompiledMajorMinorVersions(input, pathFileName);
      }
      catch (FileNotFoundException fnfEx)
      {
         out.println("ERROR: Unable to find file " + pathFileName);
      }
      catch (IOException ioEx)
      {
         out.println("ERROR: Unable to close file " + pathFileName + " - " + ioEx);
      }
   }

   /**
    * Print (to standard output) the major and minor versions of JDK that the
    * provided .class file was compiled with.
    *
    * @param input DataInputStream instance assumed to represent a .class file
    *    from which the major and minor versions of the JDK used to compile
    *    that class are to be extracted and printed to standard output.
    * @param dataSourceName Name of source of data from which the provided
    *    DataInputStream came.
    */
   public static void printCompiledMajorMinorVersions(
      final DataInputStream input, final String dataSourceName)
   {
      try
      {
         // The first 4 bytes of a .class file are 0xCAFEBABE and are "used to
         // identify file as conforming to the class file format."
         // Use those to ensure the file being processed is a Java .class file.
         final String firstFourBytes =
              Integer.toHexString(input.readUnsignedShort())
            + Integer.toHexString(input.readUnsignedShort());
         if (!firstFourBytes.equalsIgnoreCase("cafebabe"))
         {
            throw new IllegalArgumentException(
               dataSourceName + " is NOT a Java .class file.");
         }
         final int minorVersion = input.readUnsignedShort();
         final int majorVersion = input.readUnsignedShort();
         out.println(
              dataSourceName + " was compiled with "
            + convertMajorVersionToJdkVersion(majorVersion)
            + " (" + majorVersion + "/" + minorVersion + ")");
      }
      catch (IOException exception)
      {
         out.println(
              "ERROR: Unable to process file " + dataSourceName
            + " to determine JDK compiled version - " + exception);
      }
   }

   /**
    * Accepts a "major version" and provides the associated name of the JDK
    * version corresponding to that "major version" if one exists.
    *
    * @param majorVersion Two-digit major version used in .class file.
    * @return Name of JDK version associated with provided "major version."
    */
   public static String convertMajorVersionToJdkVersion(final int majorVersion)
   {
      return majorVersionToJdkVersion.get(majorVersion) != null
           ? majorVersionToJdkVersion.get(majorVersion)
           : "Unknown JDK version for 'major version' of " + majorVersion;
   }

   public static void main(final String[] arguments)
   {
      if (arguments.length < 1)
      {
         out.println("USAGE: java ClassVersion <nameOfClassFile.class>");
         System.exit(-1);
      }
      printCompiledMajorMinorVersions(arguments[0]);
   }
}
```

The next screen snapshot demonstrates running this class against its own .class file.

As the screen snapshot of the PowerShell console indicates, ClassVersion itself was compiled with JDK 8.

With ClassVersion in place, we can use Java to tell us which version of the JDK was used to compile a particular .class file. However, this is not much easier than simply running javap and looking for the "major version" manually. What makes this approach more powerful and easier to use is employing it in scripts. With that in mind, I now turn my focus to Groovy scripts that take advantage of this class to identify the JDK versions used to compile multiple .class files in a JAR or directory.

The next code listing is an example of a Groovy script that makes use of the ClassVersion class (the compiled ClassVersion class needs to be on the script's classpath). This script displays the version of JDK used to compile each .class file in a specified directory and its subdirectories.

```groovy
#!/usr/bin/env groovy

// displayCompiledJdkVersionsOfClassFilesInDirectory.groovy
//
// Displays the version of JDK used to compile Java .class files in a provided
// directory and in its subdirectories.
//

if (args.length < 1)
{
   println "USAGE: displayCompiledJdkVersionsOfClassFilesInDirectory.groovy <directory_name>"
   System.exit(-1)
}

File directory = new File(args[0])
String directoryName = directory.canonicalPath
if (!directory.isDirectory())
{
   println "ERROR: ${directoryName} is not a directory."
   System.exit(-2)
}

print "\nJDK USED FOR .class COMPILATION IN DIRECTORIES UNDER "
println "${directoryName}\n"
directory.eachFileRecurse { file ->
   String fileName = file.canonicalPath
   if (fileName.endsWith(".class"))
   {
      ClassVersion.printCompiledMajorMinorVersions(fileName)
   }
}
println "\n"
```
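For readers who would rather stay in Java, the same directory walk as the Groovy script above can be sketched with java.nio.file.Files.walk (Java 8 and later). DirectoryClassVersions is a hypothetical class, not part of the original code: instead of printing as it goes, it returns a map from each .class file to its major version, using -1 for files that are too short or lack the 0xCAFEBABE magic number.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/**
 * Hypothetical Java counterpart of the directory script: walks a directory tree
 * and maps each .class file to its major version (-1 if not a valid class file).
 */
public class DirectoryClassVersions
{
   /** Returns the major version of one file, or -1 if it cannot be read as a class file. */
   static int majorVersion(final Path classFile)
   {
      try (DataInputStream input = new DataInputStream(Files.newInputStream(classFile)))
      {
         if (input.readInt() != 0xCAFEBABE)
         {
            return -1;
         }
         input.readUnsignedShort();           // minor_version, ignored here
         return input.readUnsignedShort();    // major_version
      }
      catch (IOException ex)                  // includes EOFException for short files
      {
         return -1;
      }
   }

   /** Maps every regular file ending in ".class" under root to its major version. */
   public static Map<Path, Integer> scan(final Path root) throws IOException
   {
      try (Stream<Path> paths = Files.walk(root))   // the stream holds open directories
      {
         return paths.filter(Files::isRegularFile)
                     .filter(path -> path.toString().endsWith(".class"))
                     .collect(Collectors.toMap(path -> path,
                                               DirectoryClassVersions::majorVersion,
                                               (first, second) -> first,
                                               TreeMap::new));
      }
   }

   public static void main(final String[] arguments) throws IOException
   {
      final Path root = Paths.get(arguments.length > 0 ? arguments[0] : ".");
      scan(root).forEach((path, major) -> System.out.println(path + " -> " + major));
   }
}
```

Running it with a directory argument prints one "path -> major version" line per .class file, sorted by path because of the TreeMap; the numbers can be translated with the table near the top of this post.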

An example of the output generated by the just-listed script is shown next.

Another Groovy script is shown next and can be used to identify the JDK version used to compile .class files in any JAR files in the specified directory or one of its subdirectories.

displayCompiledJdkVersionsOfClassFilesInJar.groovy

```groovy
#!/usr/bin/env groovy

// displayCompiledJdkVersionsOfClassFilesInJar.groovy
//
// Displays the version of JDK used to compile Java .class files in JARs in the
// specified directory or its subdirectories.
//

import java.util.zip.ZipFile
import java.util.zip.ZipException

if (args.length < 1)
{
   println "USAGE: displayCompiledJdkVersionsOfClassFilesInJar.groovy <directory_name>"
   System.exit(-1)
}

File directory = new File(args[0])
directory.eachFileRecurse { file ->
   if (file.isFile() && file.name.endsWith(".jar"))
   {
      ZipFile zip = null
      try
      {
         zip = new ZipFile(file)
         zip.entries().each { entry ->
            if (entry.name.endsWith(".class"))
            {
               println "${file}"
               print "\t"
               ClassVersion.printCompiledMajorMinorVersions(
                  new DataInputStream(zip.getInputStream(entry)), entry.name)
            }
         }
      }
      catch (ZipException zipEx)
      {
         println "Unable to open file ${file.name}"
      }
      finally
      {
         zip?.close()   // also closes the entry streams opened above
      }
   }
}
println "\n"
```
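A comparable Java sketch for a single JAR file uses java.util.zip.ZipFile directly, as an alternative to the Groovy script above. JarClassVersions is a hypothetical name; it closes the ZipFile with try-with-resources and collects results into a map keyed by entry name rather than printing them. An entry too short to hold a class file header makes scan throw an IOException, which keeps the sketch short.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.util.Enumeration;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

/**
 * Hypothetical Java counterpart of the JAR script: reports the major version of
 * every .class entry in one JAR (-1 if the entry lacks the 0xCAFEBABE magic).
 */
public class JarClassVersions
{
   public static Map<String, Integer> scan(final String jarPath) throws IOException
   {
      final Map<String, Integer> versions = new LinkedHashMap<>();
      // Closing the ZipFile also closes any entry streams that are still open.
      try (ZipFile zip = new ZipFile(jarPath))
      {
         final Enumeration<? extends ZipEntry> entries = zip.entries();
         while (entries.hasMoreElements())
         {
            final ZipEntry entry = entries.nextElement();
            if (entry.isDirectory() || !entry.getName().endsWith(".class"))
            {
               continue;
            }
            try (DataInputStream input = new DataInputStream(zip.getInputStream(entry)))
            {
               int major = -1;
               if (input.readInt() == 0xCAFEBABE)
               {
                  input.readUnsignedShort();          // minor_version, ignored here
                  major = input.readUnsignedShort();  // major_version
               }
               versions.put(entry.getName(), major);
            }
         }
      }
      return versions;
   }

   public static void main(final String[] arguments) throws IOException
   {
      for (final String jarPath : arguments)
      {
         System.out.println(jarPath);
         scan(jarPath).forEach((name, major) -> System.out.println("\t" + name + " -> " + major));
      }
   }
}
```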

The early portions of the output from running this script against the JAR used at the beginning of this post are shown next. Every .class file contained in the JAR has the version of JDK it was compiled with printed to standard output.

The scripts just shown demonstrate some of the utility of being able to programmatically access the version of JDK used to compile Java classes. Here are some other ideas for enhancing these scripts. I use some of these enhancements myself, but did not show them here to retain clarity and to avoid making the post even longer.

- `ClassVersion.java` could have been written in Groovy.
- `ClassVersion.java`'s functionality would be more flexible if it returned individual pieces of information rather than printing them to standard output. Similarly, even returning the entire Strings it produces would be more flexible than assuming callers want output written to standard output.
- It would be easy to consolidate the above scripts so that a single script indicates the JDK versions used to compile both `.class` files accessed directly in directories and `.class` files contained in JAR files.
- A useful variation of the demonstrated scripts is one that returns all `.class` files compiled with a particular version of the JDK, before a particular version of the JDK, or after a particular version of the JDK.
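As a minimal sketch of the second and fourth ideas, the hypothetical ClassFileVersion class below returns the minor and major versions as a value instead of printing them, and its compiledAfter method filters a map of class names to versions by a threshold major version. How that map gets populated (from a directory, a JAR, or both) is left open here; the names and design are assumptions, not code from this post.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/**
 * Sketch (hypothetical names): returns class file version data as a value and
 * filters classes by the major version they were compiled for.
 */
public class ClassFileVersion
{
   public final int major;
   public final int minor;

   private ClassFileVersion(final int major, final int minor)
   {
      this.major = major;
      this.minor = minor;
   }

   /** Reads the header; the caller remains responsible for closing the stream. */
   public static ClassFileVersion read(final InputStream in) throws IOException
   {
      final DataInputStream input = new DataInputStream(in);
      if (input.readInt() != 0xCAFEBABE)
      {
         throw new IllegalArgumentException("Not a Java .class file");
      }
      final int minor = input.readUnsignedShort();
      final int major = input.readUnsignedShort();
      return new ClassFileVersion(major, minor);
   }

   /** Names of classes whose major version is strictly greater than the given one. */
   public static List<String> compiledAfter(
      final Map<String, ClassFileVersion> versions, final int majorVersion)
   {
      final List<String> names = new ArrayList<>();
      for (final Map.Entry<String, ClassFileVersion> entry : versions.entrySet())
      {
         if (entry.getValue().major > majorVersion)
         {
            names.add(entry.getKey());
         }
      }
      return names;
   }

   @Override
   public String toString()
   {
      return major + "/" + minor;   // same "major/minor" form ClassVersion prints
   }
}
```

For example, compiledAfter(versions, 50) would list the classes that need at least Java SE 7 to run, and "before" or "exactly" variants differ only in the comparison.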

The objective of this post has been to demonstrate programmatically determining the version of JDK used to compile Java source code into .class files. The post demonstrated determining the version of JDK used for compilation based on the "major version" bytes of the JVM class file structure and then showed how to use Java APIs to read and process .class files and identify the version of JDK used to compile them. Finally, a couple of example scripts written in Groovy demonstrated the value of programmatic access to this information.